Attention and Learning

Zhang & Rosenberg, 2023, Attention, Perception, & Psychophysics

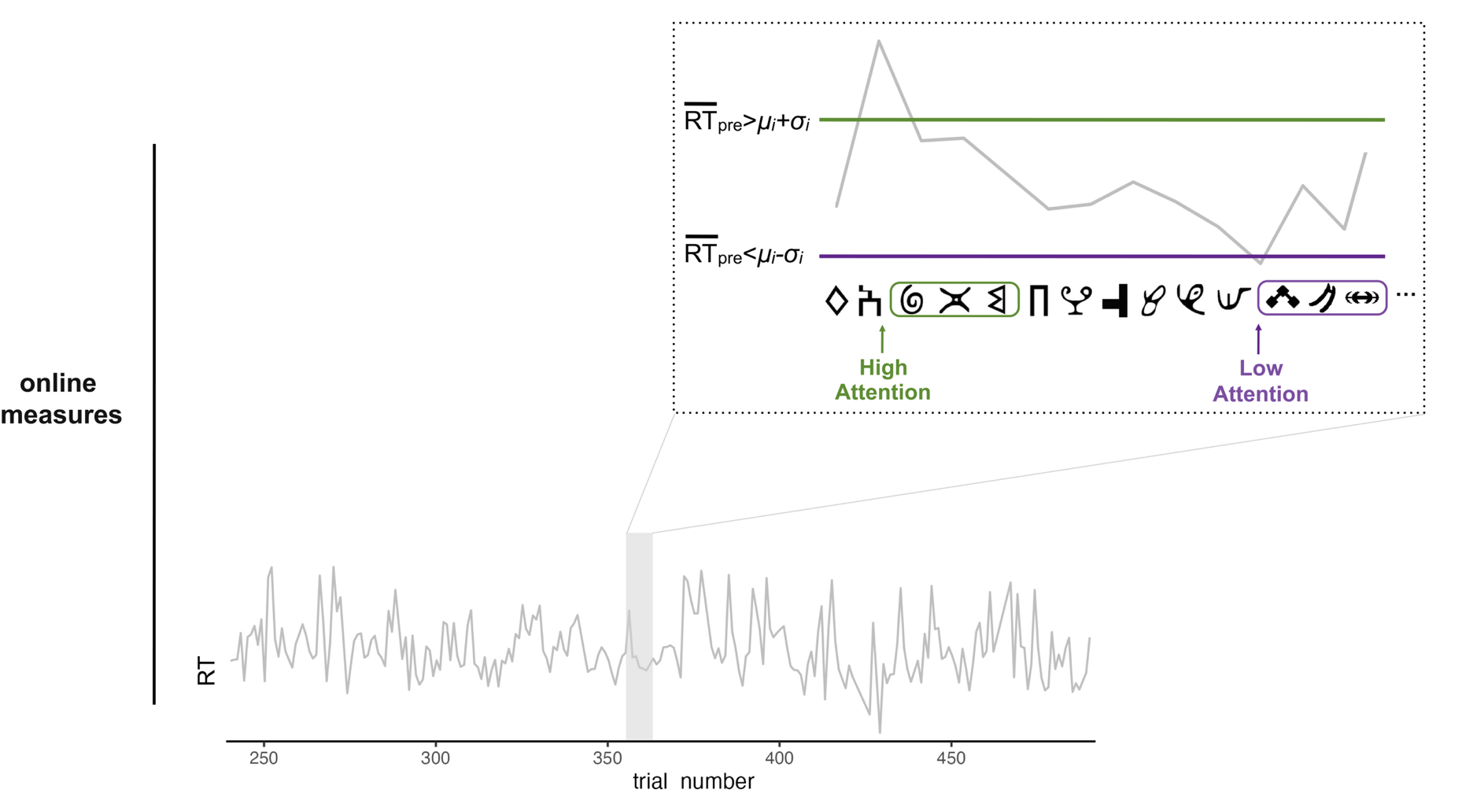

Beyond forming expectations about narrative events from knowledge or experience, we also make predictions based on regularities extracted through a more implicit process: statistical learning. What mental states make us better at extracting regularities from our environment? I designed a web-based, real-time triggering paradigm that manipulated which regularities participants learned, contingent on each participant's own fluctuations in attentional state.

I found a modest effect of sustained attention on the online extraction of regularities, but this effect did not persist in offline assessments.

Generalizable Neural Signatures of Surprise

Zhang & Rosenberg, 2024, Nature Human Behaviour

A foundational question in psychology and neuroscience is the extent to which a given mental process is domain-general or domain-specific. Surprise arises when expectations are violated, and it occurs in many different real-world contexts. Imagine walking into a restaurant expecting a casual meetup, only to be surprised by a birthday party, or gasping at an unexpected move in a sports game. Understanding whether the brain processes such surprises similarly tells us whether they arise from shared (domain-general) or distinct (domain-specific) cognitive mechanisms.

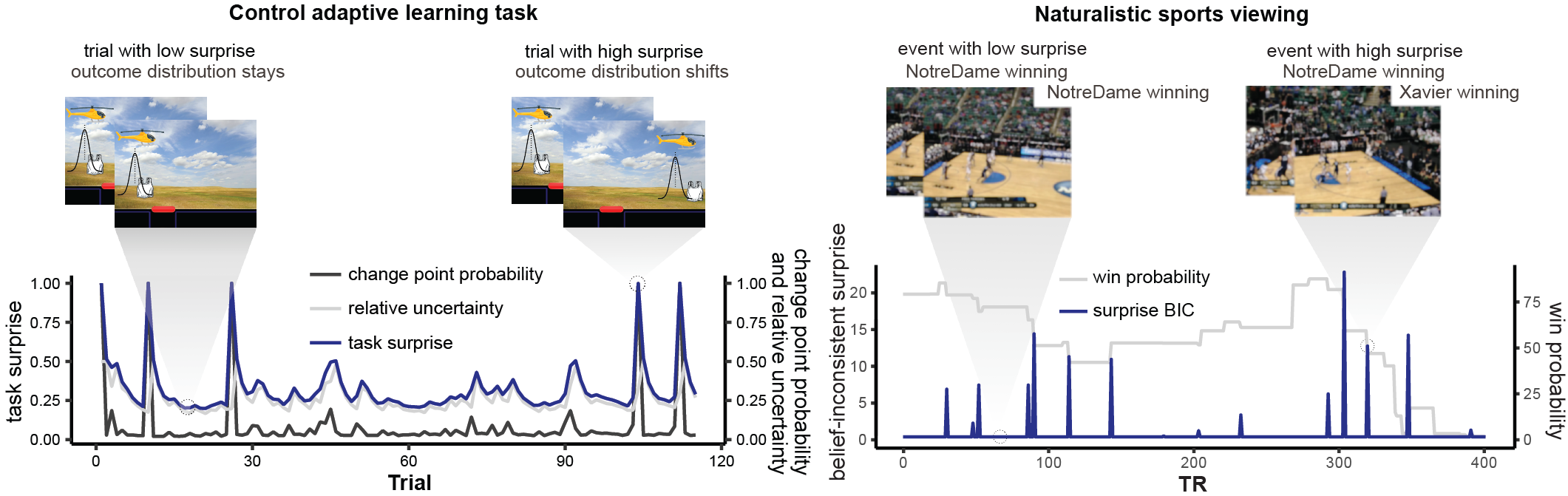

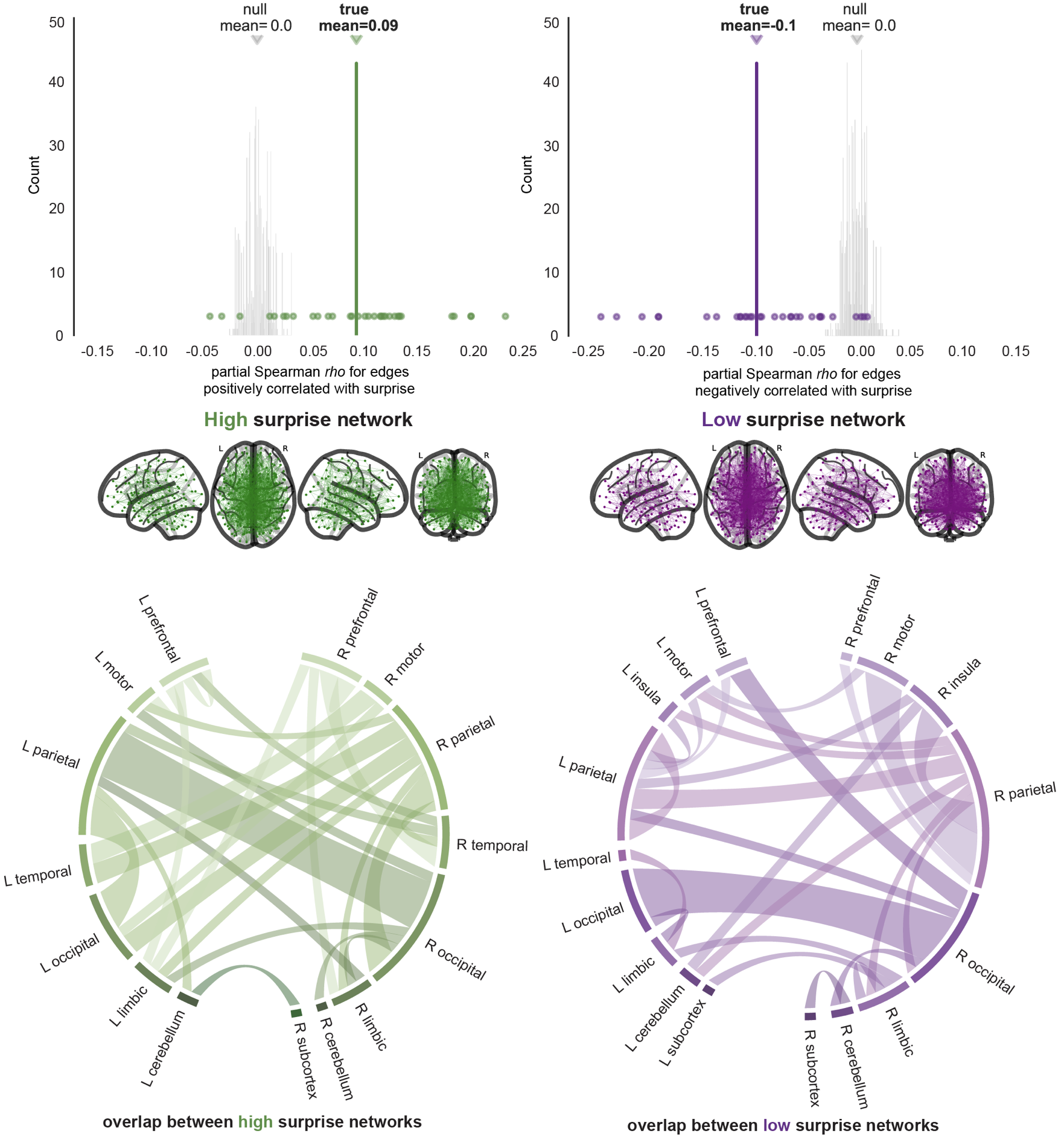

I found evidence that the dynamics of a common brain network, measured with fMRI, predict people's belief-inconsistent surprise as they perform an associative learning task, watch suspenseful sports games, and observe cartoon agents behaving unexpectedly.

Linguistic Surprise

But surprise doesn't come out of nowhere. It is tightly linked to the predictions we form just before the surprising moment, and these predictions are often based on knowledge or schemas about the world built from memory and experience.

The dynamics of this same brain network (Zhang et al., 2024) also predict participants' self-reported surprise during narrative comprehension. Furthermore, these brain dynamics track prediction error estimates derived from large language models (LLMs). My ongoing work tests whether these relationships generalize across languages.
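One common way to turn a language model's output into a word-by-word prediction error is surprisal, the negative log probability the model assigns to the word that actually appears. The sketch below assumes that measure. The text above does not say exactly which estimate was used. The context sentence and probabilities are hypothetical, made up for illustration, and no real model is called.

```python
import math


def surprisal_bits(prob: float) -> float:
    """Surprisal of an outcome with probability `prob`, in bits: -log2(prob)."""
    if not 0.0 < prob <= 1.0:
        raise ValueError("probability must be in (0, 1]")
    return -math.log2(prob)


# Hypothetical next-word probabilities that a language model might assign after
# the context "She walked into the restaurant and saw a" (illustrative numbers only).
next_word_probs = {"table": 0.40, "friend": 0.25, "party": 0.05}

for word, p in next_word_probs.items():
    # Lower probability means higher surprisal, i.e., a larger prediction error.
    print(f"{word:>7}: {surprisal_bits(p):.2f} bits")
```

In this toy example, the unexpected continuation "party" receives the highest surprisal (about 4.32 bits), which mirrors the restaurant example above.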